Why spatial data accuracy matters

(Pretoria, South Africa – 25 June 2024)

Data quality and trust are non-negotiable in a data-driven world

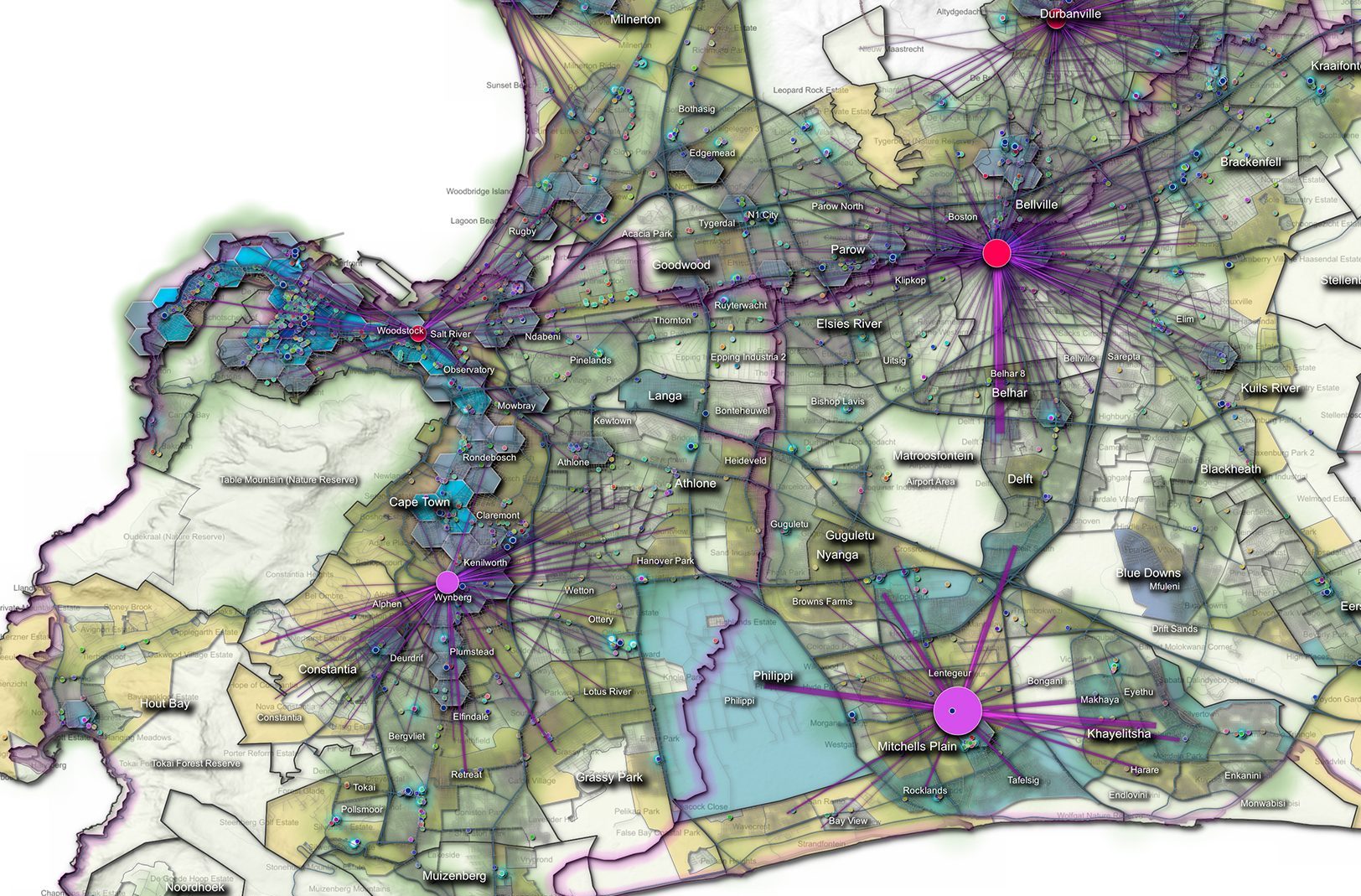

Decisions, strategies, and innovations increasingly rely on the analysis and interpretation of vast amounts of data. This data-centric mindset is vital for geospatial information solutions, which depend on accurate, high-quality spatial data to map, analyse, and interpret geographical information.

Accurate data forms the foundation of urban planning, disaster response, environmental management, and logistics, ensuring actions are based on precise and reliable information. The integrity and trustworthiness of GIS data directly influence the success of spatial analysis and decision-making processes.

“Data quality underpins the reliability of information,” says Marna Roos, Senior Client Consultant & Standards Enthusiast at AfriGIS. “It determines whether the information can be trusted and used for critical decision-making.”

Trust in spatial data is built on hard facts. Maintenance schedules and regular dataset updates are good indicators of reliability. Without a record of data history and version control, outdated information can cause significant problems and undermine trust.

Different industries have varying requirements for data quality. Emergency services prioritise positional accuracy, logistics companies focus on completeness and temporal quality, and retail companies emphasise thematic accuracy and usability. Understanding these priorities helps tailor data services to meet specific industry needs.

Ensuring high-quality data

Geospatial information providers must prioritise transparency and continuous improvement. “We revisit datasets regularly to update and improve them,” says Roos. “Positional accuracy relies not just on coordinates but also on confidence levels indicating the precision of those coordinates.” Confidence levels range from 1 to 10, with 1 being the highest accuracy and 10 indicating very vague data. For instance, a confidence level of 1 indicates the exact address, while a level of 2 might denote the correct land parcel but not the specific building. This approach ensures that clients can depend on the data for critical decisions.

“Most of our clients require data at confidence levels 1 or 2 for precise applications, such as emergency response or detailed analytics,” Roos adds. “However, for broader analyses, lower confidence levels might be sufficient. It’s about matching the data quality to the specific needs of the client.”
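To make the confidence scale more concrete, the short Python sketch below filters geocoded records against a maximum acceptable confidence level. It assumes only what is described above: levels run from 1 (exact address) to 10 (very vague), so a smaller number means higher precision. The `GeocodedAddress` record and the `fit_for_purpose` function are hypothetical illustrations, not AfriGIS's actual data model or API.

```python
from dataclasses import dataclass


@dataclass
class GeocodedAddress:
    # Hypothetical record layout, for illustration only.
    address: str
    lat: float
    lon: float
    confidence: int  # 1 = exact address, 2 = correct land parcel, ... 10 = very vague


def fit_for_purpose(records, max_level):
    """Return the records whose confidence level is max_level or better.

    On this scale a lower number means higher accuracy, so a record
    qualifies when its confidence is less than or equal to max_level.
    """
    if not 1 <= max_level <= 10:
        raise ValueError("max_level must be between 1 and 10")
    return [r for r in records if r.confidence <= max_level]


records = [
    GeocodedAddress("Address A", -25.75, 28.19, 1),
    GeocodedAddress("Address B", -25.76, 28.20, 2),
    GeocodedAddress("Address C", -25.70, 28.25, 7),
]

# Precise applications such as emergency response: levels 1 or 2 only.
precise = fit_for_purpose(records, max_level=2)
print([r.address for r in precise])  # ['Address A', 'Address B']
```

For a broader analysis, a client might relax the threshold (for example `max_level=6`, an arbitrary illustrative value). The article does not say where such a cut-off should lie.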

Interestingly, clients do not always have to pay for the highest level of data quality.

“Clients pay for the data available, and we offer various services to clean and geocode this data,” Roos explains. “Automated geocoding is less expensive, while manual geocoding, which provides higher accuracy, costs more. This sliding scale allows clients to select the level of accuracy they require without incurring unnecessary expenses.”

One significant challenge is ensuring data completeness and consistency. An address needs to include all relevant details – province, municipality, town, street name, and street number. Inconsistent or incomplete data can lead to significant issues, especially in a country like South Africa, where many places have similar names. Another challenge is keeping data up to date, which requires constant monitoring and updates to reflect real-world changes.
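The completeness requirement above can be expressed as a simple check. The sketch below assumes an address is stored as a dictionary with one key per component; the field names and the `missing_fields` helper are hypothetical and chosen for illustration only. A real pipeline would also need to validate the values themselves, for example against an official list of municipalities, which this sketch does not attempt.

```python
# Hypothetical field names for the address components listed above.
REQUIRED_FIELDS = ("province", "municipality", "town", "street_name", "street_number")


def missing_fields(address):
    """Return the required components that are absent or blank, in order."""
    missing = []
    for field in REQUIRED_FIELDS:
        value = address.get(field)
        # Treat None and whitespace-only values as missing.
        if value is None or not str(value).strip():
            missing.append(field)
    return missing


record = {
    "province": "Gauteng",
    "municipality": "City of Tshwane",
    "town": "Pretoria",
    "street_name": "Example Street",
    "street_number": "",
}
print(missing_fields(record))  # ['street_number']
```

A check like this does not resolve ambiguity between similarly named places, but it does show which records need more information before they can be placed reliably.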

“We had a case where a company was attempting to sell its client base, claiming a certain number of customers,” Roos recalls. “Upon evaluating their data, we discovered that over 50% of it was inaccurate or duplicated. The actual number of customers was significantly lower than claimed. This evaluation prevented the buyer from making a costly mistake based on false data. It’s a perfect example of why data quality is so important.”

Risks of relying on free data

Surprisingly, many large firms rely on free data, which can be problematic. Free data is often crowdsourced, and crowdsourced data can vary significantly in quality because contributors may lack expertise in geospatial data, leading to inaccuracies or inconsistencies. Researchers have questioned the reliability and fitness for use of crowdsourced data because of its diverse origins and the varying levels of expertise among contributors. Crowdsourced data may also exhibit spatial biases, with some areas overrepresented and others underrepresented.

“Free data is not reliable,” says Roos. “It’s aggregated from various sources without rigorous quality checks, making it less trustworthy. For instance, while free GIS data sets can be useful for general purposes, they are not always suitable for high-stakes decisions due to their lack of guaranteed accuracy.”

Technological advancements, such as AI and machine learning, are significantly improving data quality by automating data cleaning and geocoding processes. These technologies also help identify patterns and anomalies in large datasets.

Despite these advances, however, manual processes remain essential, especially for achieving the highest levels of accuracy. Manual geocoding allows for more detailed and precise data placement, and human oversight is still needed to validate and verify automated processes.

The future of data quality and trust

The demand for high-quality, trustworthy data will continue to grow as more industries rely on data for decision-making. Consequently, the standards for data accuracy and completeness will become even stricter. Transparency and regular updates will be key to maintaining trust. Integrating new technologies like AI and machine learning will help automate and improve data quality processes, making it easier to manage large datasets efficiently.

Roos advises organisations to make sure their geospatial data providers invest in regular data audits and updates, keep clear maintenance schedules, and are transparent about the accuracy and completeness of their data. “Positional accuracy, completeness, logical consistency, temporal quality, thematic accuracy, and usability ensure the data points are in the correct locations, include all necessary information, follow the correct format, accurately represent real-world entities, and are easy to use.”